China's Structural Advantage in Open Source AI

Not just my words, Ion Stoica’s too.

One of the dumber hot takes to come out of the so-called “DeepSeek moment” earlier this year was that the model release was timed to upstage Trump’s inauguration and mess with the White House Stargate announcement.

DeepSeek R1, released on January 20, did indeed coincide with inauguration day. But it was also one week before the start of Chinese New Year – surely a more important deadline for every engineer in China to ship, release, monitor deployment for a few days, then go home to celebrate the biggest holiday of the year. (For American readers who don’t have a good mental framework for Chinese New Year, it is as if Christmas, Thanksgiving, and July 4th were all compressed into a two-week window.)

The only thing that the “DeepSeek moment” really messed with was the holiday plans of other Chinese AI labs, which were equally shocked by DeepSeek’s progress. One in particular, Alibaba’s AI team, apparently uttered a collective “holy sh*t”, called off everyone’s holiday travel plans, stayed at the office, and slept on the floor until a competitive model was shipped. The company chairman, Joe Tsai, revealed this at VivaTech in Paris a couple of weeks ago. The timeline matches the story: Alibaba shipped Qwen 2.5 Max on January 28, the eve of Chinese New Year, when most of the celebration usually takes place.

Since then, multiple Chinese AI labs have been racing to release better models, competing not just with their American counterparts, but more fiercely with each other. More surprisingly, most of the best models coming out of China are open sourced with very permissive licenses – Apache 2.0 or MIT – not custom corporate licenses like the one Meta uses for Llama.

According to Artificial Analysis’s latest intelligence index, a combination of seven commonly used model evaluation benchmarks, the top three open source AI models all come from China – DeepSeek, Qwen, and MiniMax (a startup AI lab backed by Alibaba and Tencent, rumored to IPO in Hong Kong later this year).

As the saying goes, “one’s a dot (DeepSeek), two’s a line (Qwen), three’s a trend (MiniMax).” There appears to be something in the Yangtze River that makes Chinese AI labs more prone to open source, while almost all the leading American AI labs are on the closed source, proprietary route. This isn’t just my observation. Ion Stoica also sees multiple structural advantages that China has in open source AI, which he shared in a podcast interview last week.

Stoica is a star computer science professor at Berkeley, leading the famed Sky Computing Lab. He is also the co-founder of Databricks (one of the most valuable private tech companies, valued at $62 billion), Anyscale (another data infrastructure startup, valued at $1 billion), and LM Arena, a new startup he just co-founded to tackle the evaluation challenges of LLMs. He traverses the corridor between academia and industry with self-evident success, so his observation carries more weight than most.

Let’s unpack what these structural advantages are and their implications.

China’s Three Structural Advantages

Expertise: a factoid that Jensen Huang likes to share publicly every chance he gets is that China possesses 50% of the global AI research talent. This clean “50%” approximation is an obviously helpful talking point when he’s trying to lobby for more market access in China for Nvidia products. The “50%” comes from the MacroPolo project’s AI talent tracker research. A more precise number is actually 47% of the top undergraduate talent, or as Damien Ma, who ran the MacroPolo project, likes to say: “it's 47% of the top 20% talent” – the talent that everyone is fighting over.

In addition to producing more general AI research talent, China’s massive advanced manufacturing and biotech base is also fostering more specific industry expertise. Having more experts across different verticals is an underestimated edge now that reinforcement learning is once again emerging as a key technique for improving AI models in real-life use cases. Without domain expertise, it is hard to craft the correct reward model and properly apply RL to generate the most useful outcome.

Data: this advantage is not as simple as the clichéd view that China is a large country with a lot of people and lackluster privacy controls, and thus has a ton of data available to train AI. It is more nuanced than that.

The corpus of Chinese internet data is constantly getting deleted due to strict online discourse control, so the size and quality of this dataset are unreliable, and a possible source of disadvantage, which I wrote about a while back. Furthermore, large Chinese internet companies, from ByteDance to Tencent, tend to be walled gardens. So the user-generated data produced on these platforms is not easily scrapeable by others to train AI models.

China’s structural data advantage can be understood in two ways. One, and connected to the previous advantage in domain expertise, there is a lot of vertical industrial data being produced. These datasets are both large in quantity, because many Chinese industries tend to be more 5G-connected and IoT-ready, and high in quality, because they reflect real-world use cases and problems. It is really the best type of training and evaluation data.

Two, while Chinese internet data overall is less open and less plentiful, it is still a large source of information that most leading American AI labs have self-selected out of. Contrary to the consensus narrative, ChatGPT wasn’t “banned” right off the bat by China when it was released; OpenAI proactively decided not to make ChatGPT available in China and opted out of that market, in order to more cleanly align and shape the geopolitical landscape of AI (democratic AI vs authoritarian AI). Other closed source American AI labs more or less followed suit. So Chinese internet data is of little use or value to them. Chinese AI labs effectively have that dataset all to themselves, while their access to global internet data is unfettered.

Combining better industry-specific data with internet data from inside and outside China makes for quite the data advantage.

Open source as default: there is a tight link between academia and commercial AI labs, because much of AI development is still pure research and pure science (if not science fiction). Stoica’s post-docs and PhD students, many of whom became his co-founders, are prototypes of this link. This link is even tighter and more fluid in China than in the US or elsewhere. And because open source is the default mode of all academic research and exchange, most Chinese AI labs have also adopted open source as their default mode.

Defaulting to open source is not inevitable. Most Chinese AI labs were, by their own admission, behind their American counterparts when ChatGPT woke everyone up to LLMs’ potential. Adopting open source is a time-tested strategy to both catch up and erode the competitive moats of whoever is in the lead. This was the core reason why Meta, which was also behind, chose to open source Llama two years ago.

What might have started as a defensive business decision has evolved into a flywheel of academia-industry collaboration that is starting to yield frontier-level progress. An open source AI lab with corporate backing can pay industry-level salaries to better attract and retain top academic talent, who can continue to do research and exchange ideas in the open. Releasing advanced open models, as opposed to previous-generation ones, also helps grow a stronger community of developers who contribute to and improve the models. Open source helps diffuse AI technologies to more use cases faster, because open models are free to use and customize; the only cost is the amount of work you want to put into that customization. The contribution benefit and diffusion power are not just local to China, but global by default.

Most Chinese AI labs seem to have internalized and executed on many of the secondary and tertiary benefits of open source. (ByteDance is an interesting holdout.) As a result, the academic-industry talent pipeline is even more fluid in China’s AI ecosystem than in America’s.

What are the broad, macro implications of these advantages, especially as juxtapositions or foils to American AI labs?

Academia-Industry Flywheel vs Capitalism as Intermediary

One juxtaposition is the ways in which the academic-industry flywheel functions differently between the US and China.

When it comes to tech, the connection between academia and industry is nothing new. Silicon Valley, with its proximity to Stanford and Berkeley, is the archetype of this connection, which many countries around the world tried to replicate when building their own tech ecosystem. But there has always been an intermediary between academia and industry in Silicon Valley: capitalism. It comes in the form of either large pay packages from tech giants or seed investment checks from VC firms or angels, luring academics to become startup founders.

Ion Stoica’s career is full of examples where the force of capitalism carried him into commercial success while he maintained his research pursuits as an academic. More recently, Mark Zuckerberg’s aggressive AI talent poaching, from offering $100 million pay packages to using billions to buy out startups or portfolios of VCs outright, is the more extreme case of the free market intervening in the AI talent flow.

This capitalistic intermediary used to be a force in China’s tech ecosystem too, but less so today. VC, in its pure financial form, has mostly disappeared. Early stage funding has been replaced by funds with corporate or government backers, whose incentives and goals are different from simply maximizing financial returns. Talent poaching among competitors still exists, and always will, but the numbers are less eye-popping and the moves less frequent. What has endured is a system that rewards, and sometimes requires, industry contributions from academics in order for them to advance as academics. In other words, in many STEM fields in China, to become a tenured professor or respected scholar, publishing papers is not enough. Your research needs to have some real-world impact too!

This incentive structure has been in Chinese academia for at least a decade. It is part of the university promotion system, especially at the leading universities. For example, Zhejiang University, a leading research university in Hangzhou and the alma mater of Liang Wenfeng of DeepSeek fame, requires researchers to do tours of duty inside companies that the university is an investor in to demonstrate practical utility, in order to advance their academic careers. Conveniently, the university has built a solid portfolio of companies under the Zhejiang University Holding Group, which spans industries from new materials and manufacturing to biotech and energy. (For a good articulation of this policy, I recommend you listen to this episode of a popular Taiwanese podcast interviewing two Chinese tech insiders.)

Talent is now widely recognized as a bigger bottleneck to AI progress, as the scarcity of advanced GPUs eases. While the academia-industry flywheel exists in both the US and China, it appears to operate in drastically different ways. In the US, the flywheel is lubricated by big pay days with the allure of fame and fortune. In China, the flywheel’s tailwind comes from a top-down push to future-proof real-world diffusion. The former is decidedly more lucrative for the participants but also less collaborative. The latter looks more efficient, though perhaps at the expense of pursuing discoveries with no obvious usefulness today.

Shared Artifacts vs Redundant Infrastructure

Another angle that puts China’s embrace of open source and the US’s preference for closed source in direct contrast is this tension between building on shared artifacts versus developing in silos with redundant infrastructure. Stoica talked a lot about this point in his podcast interview.

In the world of technology R&D or academia, an artifact is akin to a building block. It can be a set of open model weights. A library that speeds up parallel processing in training. A toolchain to deploy models more efficiently or safely. It is a modularized piece of reusable technology that someone else can use or build on top of. Open source is effectively a technology building methodology that pieces together publicly available artifacts to achieve new progress. Implicit in sharing artifacts via open source is that it reduces redundant effort. Everyone who is working on the same general problem more or less knows what everyone else is working on, which elements have been solved and reduced into an artifact, and which problems remain and are therefore good directions for people to focus their energy on. It does take a lot of coordination and doesn’t work as perfectly in real life, but it is at least designed to be an efficient way to collectively advance progress and knowledge.

Working in silos in a closed source setting has the opposite effect. In Stoica’s estimation, all the leading American AI labs are doing more or less the same thing. Because they are functioning in silos, and “sharing” only happens if certain key people are poached from one lab to another (assuming no corporate espionage or violation of non-disclosure agreements), there are no shared artifacts to build on. Even Meta, the most open leading AI lab in the US, is resorting to a massive spending spree, not shared artifacts, to catch up.

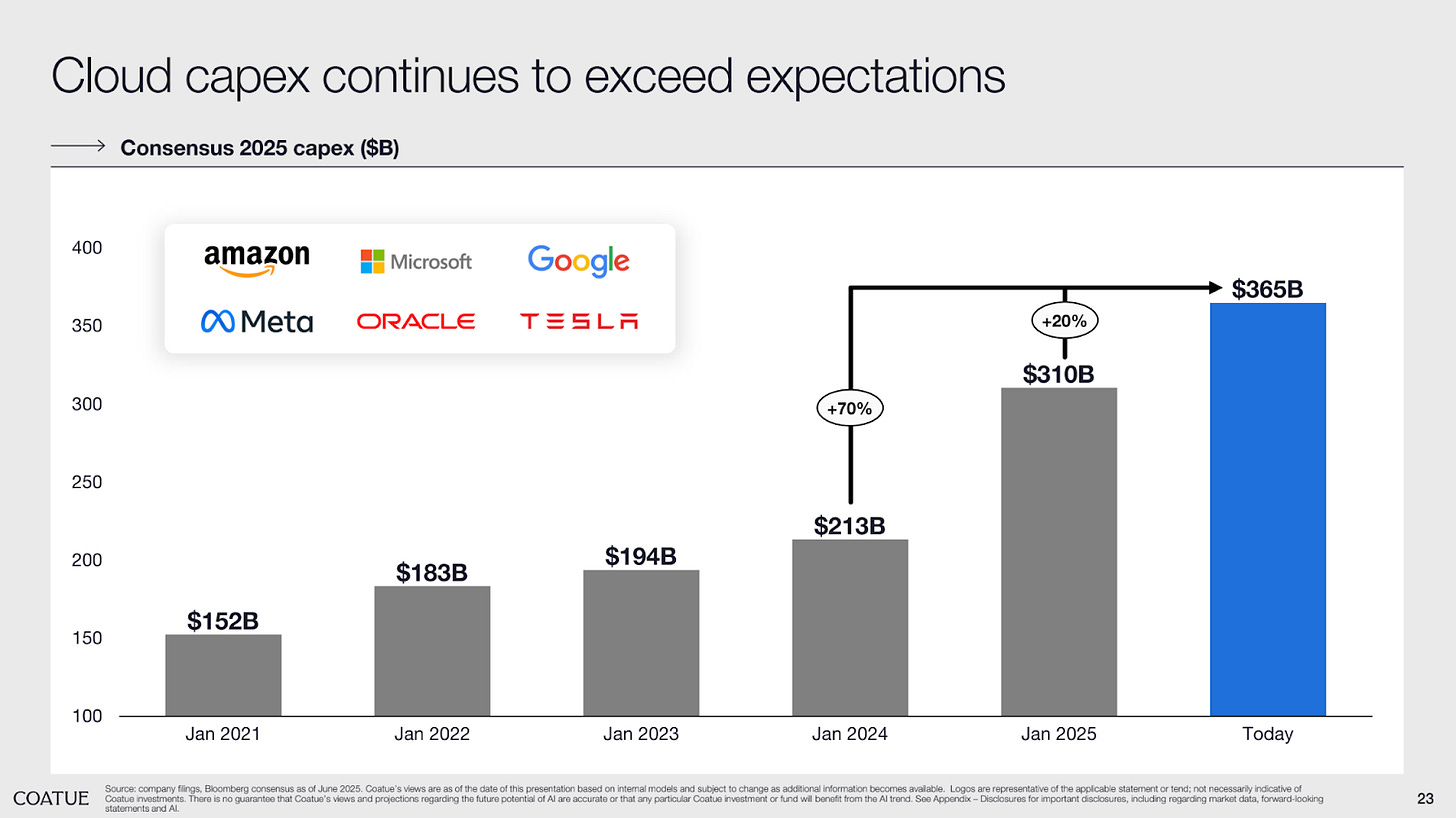

Meanwhile, a whole lot of infrastructure is being built to support these silos. According to Coatue, the 2025 cloud AI data center infrastructure spend is expected to reach a whopping $365 billion among the top six big tech spenders – Amazon, Microsoft, Google, Meta, Oracle, and Tesla. This estimate likely does not include the full extent of Stargate, the project that the “DeepSeek moment” supposedly embarrassed in the first place. A good percentage of all this capital expenditure, I suspect, is redundant infrastructure in service of the many siloed labs, in lieu of shared artifacts.

Chinese AI labs’ embrace of open source AI, along with the structural advantages that are enabling them to do so, does not mean there is no redundancy in their infrastructure. Alibaba, Huawei, Baidu, and DeepSeek, which is famous for running its own data center in order to learn how to squeeze the most out of its limited supply of export-controlled GPUs, will continue to build more of their own infrastructure. Any speculation or prognostication that China will one day force all its companies to pool their resources to build AI for the country falls into the category of dumb hot takes, when competition among the labs is a key catalyst of advancement. Don’t forget how our story started: the team that literally lost the most sleep over DeepSeek was Alibaba, not Meta, not OpenAI.

But it does make one wonder. When an otherwise very closed society, like China, prefers the transparency of open source, while an otherwise quite open society, like the United States, chooses the secrecy of closed source, what does this mean for AI as a technological transformation for all of humankind?

Great write up - thanks.

One thing to add - it's an immense joy, all these open source models, and the entire ecosystem that sprang up around them in a short time. With many 1000s of enthusiasts in their homes, who would not have been part of this revolution otherwise. Reminds me of the prior IT revolutions: the 1st, the PC revolution in the 1990s that put a computer on every desk (first; then in everyone's pocket later), and the 2nd, the Internet revolution in the 2000s that connected (by now) almost 8B people 24/7 at almost zero cost in a global village. That too had bottom-up ecosystems of enthusiasts and tinkerers, learning by doing.

Now checking my disk for what OSS AI LLM stuff I use daily. Software - llama.cpp, mlx, pytorch, LMStudio, Jan. Models - new default as of last night https://huggingface.co/unsloth/dots.llm1.inst-GGUF. Previous favourite was Qwen3-30B-A6B-16-Extreme-128k-context-Q6_K-GGUF, and a few other MoE variants of Qwen3-30B-A3B. With runner up OpenBuddy-R1-0528-Distill-Qwen3-32B-Preview2-QAT.Q8_0.gguf - but that one is non-MoE so slower for me on a MBP with lots of VRAM but lacking an Nvidia GPU.

Looking forward, I'm waiting on GGUF quants to try https://huggingface.co/MiniMaxAI/MiniMax-M1-80k and https://huggingface.co/moonshotai/Kimi-VL-A3B-Thinking-2506. All Chinese. The only non-China labs I see I've got are Jan-nano-128K and Mistral Magistral, but they only release the small one, not even the medium ones - so meh.

"not easily scrappable" means not easily scrapped, discarded, thrown away. More likely what's meant is "scrapeable", meaning capable of being scraped, retrieved from a web page.