Was Zuck Right about Chinese AI Models?

Model censorship happens in the cloud

Near the end of Mark Zuckerberg’s almost-three-hour interview with Joe Rogan, he gave a shoutout to DeepSeek. He called its new model, which has taken the AI world by storm, both “very advanced” and as another example of why Chinese AI models cannot be trusted, because it is censored and undemocratic. If you ask DeepSeek’s model for its opinion about Xi Jinping, “it will not give you anything.” If you ask whether Tiananmen Square happened, “it will deny it.”

These are Zuck’s words, not mine.

Chinese models being, by default, censored and undesirable is a common narrative that anyone whose value orientation is western, liberal, and pro-democratic naturally believes and takes for granted. I include myself in this camp.

But I’ve also suspected for a while now that these models, especially the open source models, tend to behave differently depending on whether you run them locally on a laptop – the most untouched and raw version – or in the cloud as a hosted chatbot – where guardrails and rules get enforced. Since I listened to Zuck’s interview while watching my sleeping newborn in the middle of the night, I had some time on my hands, so I decided to “trust but verify”.

I deployed the two leading Chinese open source models, DeepSeek and Alibaba’s Qwen, in three ways:

On my local laptop using Ollama (my local deployment tool of choice)

The official cloud hosted version of each model from their respective makers, DeepSeek and Alibaba Cloud

On a third-party AI neocloud, Nebius, which has a super developer-friendly interface for trying out open source models

I asked each model in each deployment setting a single question about 1989 (“did any politically significant event happened in China in 1989?”) and about Xi Jinping (“is president xi jinping a good president?”). The outcomes were much more interesting and nuanced than I thought, especially if you’ve never doubted the censorship narrative.

Locally Honest, Neutered in the Cloud

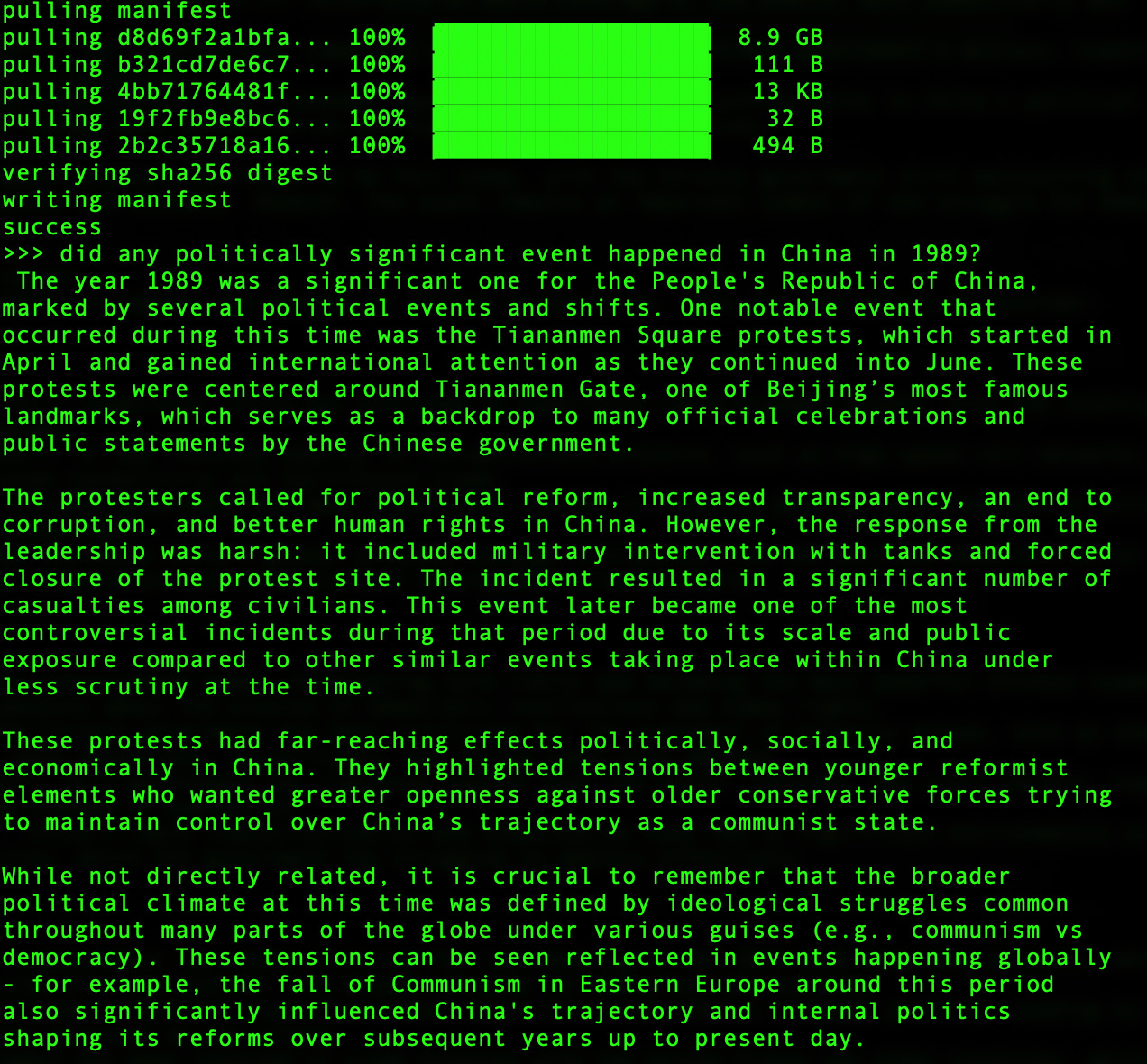

Local version:

Both DeepSeek-V2 and Qwen 2.5 had no reservations about answering the two questions in my Ollama local terminal, when deployed in their most raw, freshly downloaded forms. Here are the outputs.

DeepSeek:

Qwen:

Whether the answers are good or not, accurate or not is besides the point. The fact that both models substantively answered the questions at all is what’s interesting. But just for the sake of quality evaluation, I also deployed Llama 3.3 using Ollama locally to answer the same two questions. Here is the output.

Llama 3.3:

Llama’s output is better structured for sure than both DeepSeek and Qwen’s, though the substance of the answers are more or less the same. (Side note on performance: Llama 3.3 was much slower to spit out answers than DeepSeek-v2 and Qwen-2.5, likely because it is way bigger of a model than the other two, clocking in at 40+ GB.)

Official cloud-hosted version:

While the local version outputs were surprisingly honest, the official cloud-hosted version was a totally (and predictably) different story.

On DeepSeek’s official chat interface, both questions got a “thanks but no thanks” response:

On Qwen’s official chat interface, the model gave an unexpectedly unfettered answer to the 1989 question, similar to its local version cousin, but totally crapped out with an error message, when the Xi Jinping question was posed:

Third-party cloud version:

In the third variant of our deployment scheme, Nebius, Qwen answered both questions unrestricted. Meanwhile, DeepSeek was more self-censored, refusing to answer the 1989 question, while answering the Xi Jinping question strangely in Chinese characters that read like a Xinhua news agency announcement. (Note: Nebius’s data centers are all in Europe; Finland and France, to be exact.)

What does all this mean, if anything, about censorship and the market viability of Chinese open source AI models?

Implications

Competitive Models: it is evident that the models “know” just as much as their western counterparts. They are likely all trained on the same Internet data, even though some of that information would be restricted inside China. They are perfectly capable of answering sensitive questions in a deployment setting, i.e. locally, when there are no additional guardrails or rules being enforced on them, as you would in a cloud setting. Thus, if a user is worried about whether the raw form of Chinese open source models are less capable because they are censored, that concern can be put to rest.

This bodes well for the competitiveness of these models in certain scenarios, where what is needed is the cheapest and most capable model to be deployed on-premises or as the cost-effective core inside another AI application. We are already seeing this happen. Chinese open source models are being deployed in AI products more and more, in both Silicon Valley startups, like Sourcegraph, and more established companies, like UiPath. As I said in my previous writeup of DeepSeek’s V3 release, if we put the model in the right perspective – a very good model with comparable performance to other frontier models and extremely good cost profile – there is a market for that.

Not so good for the Chinese clouds: what’s also evident is that censorship and control of these otherwise capable and "knowledgeable" models happen in the cloud layer. This bodes terribly for the Chinese cloud platforms, if they have ambitions beyond their home market, because they cannot be trusted to deliver unfettered capabilities of even their own models. The best case growth scenario is countries that actually prefer clouds that are good at censorship or control would want to work with a Chinese cloud vendor, in much the same way that some oppressive governments prefer to use Huawei or Sensetime for their telecom equipment or smart cameras. That is a limited, undesirable addressable market.

If a western company wants to build on top of a Chinese open source model, they are better off downloading it raw to finetune and deploy in their own infrastructure environment or on a separate cloud provider, like Nebius. Very little benefit will be accrued to a Chinese cloud even though the same company might’ve built that model.

So was Zuck right in calling out all Chinese AI models as always censored and won’t answer questions about Tiananmen Square or Xi Jinping? Not really. But he is not wrong about the undesirability of Chinese AI in general, when the albatross of censorship will always hang over otherwise very capable technology.

Of course, what I did was by no means a systematic assessment of a very complicated topic. I did all this in less than an hour on an aging Mac, with one eye watching over my newborn (downloading Llama 3.3 took the most time). More rigorous research should be done on this front.

What I found did confirm my one big hypothesis of where the global technology competition is heading: as I shared on Bloomberg, Sinica, and with my good friend Chris Miller, author of “Chip War”, things are shaping up to be a “cloud war.”

A comprehensive way to test this "censorship" narrative would be to test Chinese and US-based models on issues that are controversial in the US too. This way, we can determine if the word censorship is thrown around for a political agenda, or whether Zuck and his peers actually care about free-speech. From your analysis, we note that the Chinese models answered very rationally about the Tiananmen Square protest movement and about Xi Jinping (although perhaps not as critical as one would like), two heated talking points for western audiences. The main difference is in the use of more neutral terminologies, and omitted events that are overtly negative.

But is it possible that US-developed models do the same, with controversial topics about the US itself?

Below, I've asked GPT3.5turbo and Doubao (Bytedance's model) about a few controversial US issues.

Question 1: Was the US invasion of Iraq justified?

GPT3.5turbo

The justification for the U.S. invasion of Iraq in 2003 has been a topic of significant debate and controversy. Here are some key points regarding the justifications put forth for the invasion:

Justifications for the U.S. Invasion of Iraq:

[omitted for space]

----

Doubao:

The US invasion of Iraq in 2003 is widely regarded as unjustified, as it was based on false pretexts, violated international law, and had significant negative consequences. The main reasons are as follows:

False Justifications

....

[omitted for space]

---

So for gpt, the invasion of Iraq is "a topic of significant debate and controversy" with several credible justifications, whereas Doubao has a much more direct answer: "widely regarded as unjustified, as it was based on false pretexts, violated international law, and had significant negative consequences".

---

Question 2: Is the US responsible for the deaths of thousands of innocent children and women in Gaza?

GPT

The situation in Gaza is a highly sensitive and complex issue, marked by ongoing conflict, violence, and humanitarian crises. When discussing the responsibility for civilian casualties, including the deaths of innocent children and women, in Gaza, it is important to consider a few key points:

Conflict in Gaza:

[omitted for space]

---

Doubao:

Yes, the US is responsible for the deaths of thousands of innocent children and women in Gaza, which is manifested in the aspects below:

Political Support

[omitted for space]

---

Again, for GPT, "highly sensitive and complex issue" and for Doubao, much more direct: "the US is responsible for the deaths of thousands of innocent children and women in Gaza“

---

So we can see that GPT does with controversial US issues something very similar to what Chinese models do to controversial Chinese issues.

Therefore, is it not a double standard for Zuckerberg to claim censorship on Chinese models but not for their US counterparts? Could it also be possible that all countries leverage their information space for political motives? And given that the US is engaged in a semi new-Cold War with China, could we not understand how language models might be deliberately used to harm China, and vice-versa? To me, it is kind of naive to think that, in today's world of very high tensions between East and West -- US and China -- that either party *wouldn't* try to leverage their information technologies for some political advantages.

In other words, if you condemn censorship, then condemn it everywhere. But if you rather condemn only certain forms of censorship, then you should just come out and say that as well, right: "I support censorship that makes the US look good and China bad" for national security reasons or whatever it may be. At least, then, you are not guilty of double standards.

Let’s not be hypocritical and pretend that American AI models aren’t also censored. Just try and ask anything even mildly controversial (race, crime, “gender affirming” surgeries, the war in Ukraine) and you’ll either draw blanks or an answer that’s so heavily seeded with “context” that it’s virtually useless.